Users can fit up to 1TB of RAM thanks to the 16 DIMM sockets on this E-ATX board.

Well, to make use of all the RAM you lose half the PCIe slots, so that's no good for a GPU rendering machine.

PCIe risers might help there.

Oh no! That's really dumb, because there is no other use for a server or workstation apart from GPU rendering...

Oh wait, no, that's not true.

Most of the servers I've run/maintained have had no expansion cards, or maybe one SCSI card which was no longer than the slot. There's certainly no reason to think that not being able to get very long cards into all of the PCIe slots is a major obstacle to Gigabyte selling these boards - that's only one, relatively small, potential market they're not addressing.

Besides, not all accelerators and professional cards are long. The Instinct MI8 is a short-board, dual-slot card intended for AI, and the WX5100 is a short-board, single-slot professional workstation card. You could stack a number of either of those into this board...

Pleiades (23-06-2017)

Two additional 8-pin power connectors as well as the standard 24-pin, for one CPU. It requires a fair bit of power, then.

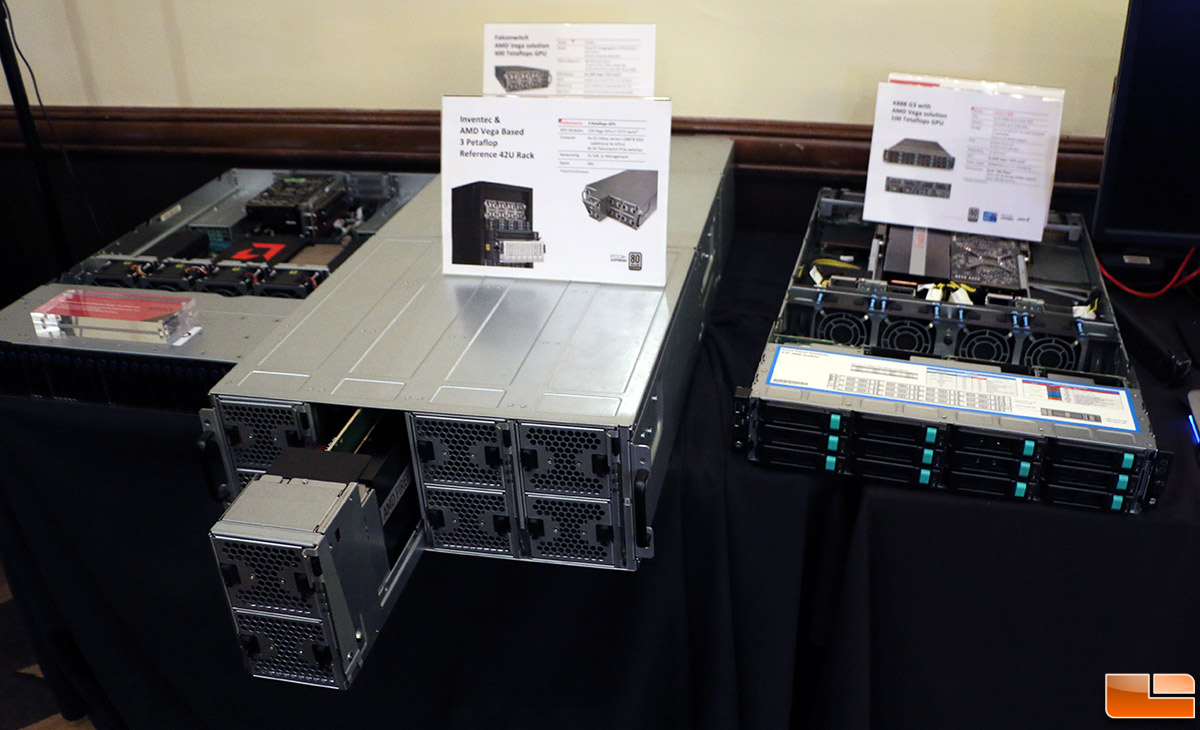

But EPYC is designed for systems that DO utilise the GPU for processing... it's part of their marketing too (deep learning, which links to their Radeon Pro stuff).

If this is meant for what you describe, then why all the slots (up to 4 x PCIe Gen3 x16 slots and 3 x PCIe Gen3 x8 slots)? Also, in my experience, if you're going to use a server for GPU processing you'll go for the highest-spec GPUs you can afford so you get the best performance, and the top-of-the-line cards are still full length. If you're going for lower-end cards, you likely don't need all the RAM either, because they have less GPU memory to start with.

IIRC the Fury X's PCB was pretty short; that's how they got away with making the Nano, right? This was because they swapped GDDR5 for HBM stacks. The pro Pascal GPUs use HBM, and so will Vega. So those PCBs could still be short enough, possibly? You'd need to throw away the cooling they came with and get custom water blocks, I would assume. Sure, it's expensive stuff, but if you've already splashed out on 1TB of 2666MHz LRDIMM, you're probably crazy enough to do this too, right? Someone must know.

Server GPUs shouldn't have fans. Rather the server chassis should have little 10,000 RPM screaming Delta fans or similar.

Some of the Threadripper boards shown do have the socket at the 'top' (I'm so used to tower layouts; basically, it sits near the rear motherboard ports, away from the expansion slots). However, the AMD reference EPYC demo motherboard shown has a similar layout to this one, as it's a dual-CPU board.

Two 8-pin connectors for that 300 W+.

But...but...no RGB?

GPU rendering is not the only use of a server.

There are all sorts of server loads. Epyc is designed to address many of them. AMD are bigging up the fact that they're good for GPU acceleration, but that's a side effect of the high PCIe lane count they've been able to plumb out, which itself is a side effect of them going quad-die MCM. GPU acceleration is a relatively niche workload in the general server market, so it'd be utterly stupid of AMD to target just that market.

This board is not designed for high-end GPU rendering machines. But it does address a lot of server workloads. The fact that it offers 16 SATA ports might give you an indication of where Gigabyte think it might be used. EPYC's real selling point is the ability to run many threads at low TDPs with massive memory capacity and bandwidth. This board is clearly intended to make the best of those features in 1P servers and workstations, making use of a standard motherboard format. Other boards will be produced which target high-end GPU rendering workloads.

Last edited by scaryjim; 25-06-2017 at 03:53 PM.

The graphics cards are most likely connected via cables or risers.

aidanjt (26-06-2017)

Let's be honest, there are a ton of ways of using all those PCIe slots. You could load it with storage controllers for a phenomenal amount of SAS/SATA bandwidth. You could use risers, either rigid or flexible, to carry the signals to cards laid out in other parts of the case to drive a huge GPU-based render farm. And since AMD do lower-cost 1P EPYC processors, I still maintain that it has a place as an in-lab AI training platform loaded with 4 MI8 accelerators (I certainly know researchers who'd consider blowing a chunk of their budget on a tower loaded with MI8s just to see what they could do with it).

The basic point is, the fact that you can't load it full of RAM and stick long graphics cards directly into the PCIe slots doesn't make it any less worthwhile.
