Firstly, DX12 is not a rebadged Mantle; AMD donated the code for Mantle to the Khronos Group. That's not to say the development of Mantle had nothing to do with forcing Microsoft to pull their finger out of their ass and start development on DX12. It's just to say that the two APIs are exactly that: different ways of addressing the same problem.

Secondly, the developers of the Oxide Nitrous engine, the engine that Ashes of the Singularity uses, worked very closely with AMD on the development of Mantle for many years, and vice versa. When it came to porting it over to DX12, most of the heavy lifting had already been done on an AMD API and AMD cards. Is it any surprise that AMD cards see big increases in performance on a benchmark that AMD helped develop?

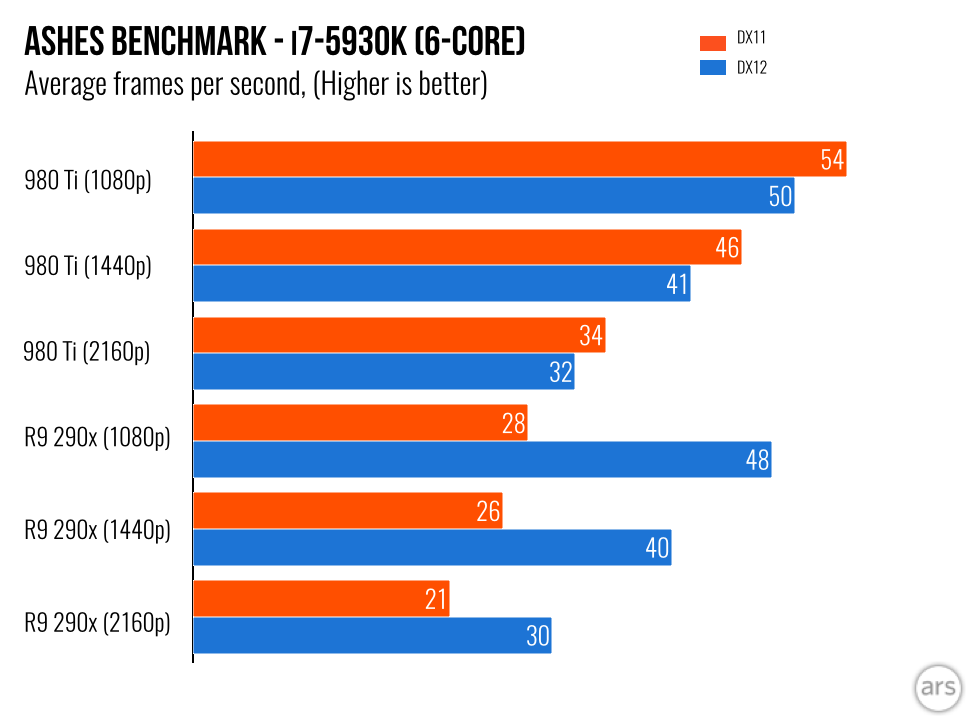

How has it backfired? IIRC the Ashes of the Singularity benchmarks, the ones that disabled AA because of the claimed bug, show that yes, AMD saw massive gains when going from DX11 to DX12, but it didn't magically make them faster than the equivalent offering from Nvidia. At best the AMD cards were within a few percentage points of their competitor.

I mean, we are talking about an R9 390X being at most 10% faster than a GTX 980 when running a DX12 benchmark, but OVER 95% slower when running the same benchmark in DX11.
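A quick aside on the arithmetic, since "X% faster" and "Y% slower" are measured against different baselines and are easy to mix up. Below is a minimal sketch in Python; the function names and the frame rates are made up purely for illustration and are not taken from any benchmark run.

```python
def percent_faster(fast_fps, slow_fps):
    """How much faster the quicker card is, measured against the slower card."""
    return (fast_fps - slow_fps) / slow_fps * 100


def percent_slower(fast_fps, slow_fps):
    """How much slower the slower card is, measured against the quicker card."""
    return (fast_fps - slow_fps) / fast_fps * 100


# Hypothetical frame rates for illustration only, NOT real benchmark results.
fast, slow = 39.0, 20.0

print(f"quicker card is {percent_faster(fast, slow):.0f}% faster")
print(f"slower card is {percent_slower(fast, slow):.0f}% slower")
```

With those made-up numbers the same gap reads as roughly 95% when quoted as "faster" and roughly 49% when quoted as "slower", so it is worth checking which baseline a headline figure uses.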

Given the choice of a card that's £50 cheaper, that isn't very good with DX11, and that's dependent on DX12 and game developers/engines to match the performance of the more expensive card, I know what card I would choose.

You're conflating the two. Mantle is not a hardware driver, it's an API, and the two are very, very different. Having Mantle or any other API doesn't mean you don't still need hardware drivers, and developers writing and optimising the code for said drivers.

GPUs are built however the manufacturer decides they should be built, be that for parallel processing or serial. Those decisions are taken by looking into a crystal ball and then designing your hardware in a way that works best with how you see the future panning out. AMD gambled on a future ecosystem with heavy dependency on parallel processing, Nvidia gambled on serial processing. It turned out Nvidia's gamble paid off and AMD had to force the issue.

I can't argue with DX11 being an old technology. DirectX became old technology when Microsoft decided to get into the console market over 15 years ago, but that's another story.

Are those results with AA off, or on? Because with AA off we get a very different picture...
