There, fixed that for you:

http://www.pcgameshardware.de/Vega-C...ion-1232684/3/

as people might have a hard time finding a CB article with PCGH graphics!

Yes, I was tempted to post the FP benches, but really it needs a perf/watt calculation for both POV-Ray (the FP test) and MySQL, and I couldn't be bothered to make those!

Needless to say, the FP result is a crazy win for AMD, but the database result is a win for Intel (though not as big a one, I think).
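For anyone who does want to run the numbers, the perf/watt sum mentioned above is simple once you have a benchmark score and a power reading for each system. A minimal sketch follows; the function names are made up for illustration and the figures in the usage lines are placeholders, not results from the review.

```python
def perf_per_watt(score: float, watts: float) -> float:
    """Benchmark score divided by average power draw under load."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return score / watts


def efficiency_ratio(score_a: float, watts_a: float,
                     score_b: float, watts_b: float) -> float:
    """How many times more efficient system A is than system B."""
    return perf_per_watt(score_a, watts_a) / perf_per_watt(score_b, watts_b)


# Placeholder inputs only: substitute the measured score and wall power
# for each platform, separately for the POV-Ray and MySQL tests.
ratio = efficiency_ratio(score_a=1000.0, watts_a=250.0,
                         score_b=800.0, watts_b=400.0)
print(f"System A is {ratio:.2f}x as efficient as system B")
```

The score needs to be "higher is better" for this to make sense, so a render time would have to be inverted first.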

Didn't see any VM benches and although they might be hard to bench (since the CPU loads of VMs are a totally open-ended thing), you'd think EPYC would do very well there. And you'd think if someone needs to deploy 1000s of VMs, if 80% or more run well on EPYC and only 10% run significantly better on Skylake-EP, it would make sense to split the load.

Of course, for smaller players now that there are such things as encrypted VMs, migrating between Intel and AMD might be a problem.

That Intel troll in the comments does have a minor point: anyone deploying non-proprietary software would benefit from it being compiled for the CPU. Of course, custom compiles and optimisations are where someone needing 1000s, 10,000s or even 100,000s of servers could really benefit from EPYC. You'd think that Google, Amazon etc. will be buying quite a lot of AMD chips, not only because AMD are likely to give them a great deal, but also because they want competition. Google might even be interested in a custom Zen server with their own silicon included, which Intel are unlikely to offer any time soon.

I'm not sure which troll you mean (AAT comments tend to be full of them!), but both platforms can likely benefit from specific optimisations. For example they mention memory settings in the database section, and on top of that, some database software might benefit from being 'aware' of Ryzen's fairly unique cache architecture - even with Threadripper/EPYC, you're still stuck with the same effective L3 cache per core as the base Ryzen CPU, so you're bound to have some performance issues if the software is expecting either a huge LLC (no prefetcher on the L3) or is doing lots of cross-fabric accesses. That's not trying to play anything down, but given we're seeing something similar with Intel's mesh architecture (the fanboys going on about the ring bus being superior have either gone silent or switched to hyping Coffee Lake instead), we're likely to see cache architectures more carefully considered in software design, particularly server applications such as these.

I imagine the MCM design makes inventory control that bit simpler too (not to mention no extra mask costs, etc) - just keep producing lots and lots of Ryzen dies at GloFo and divide them up between the different package configurations as the market demands. I could see that allowing a far more rapid response to a changing market than having to change orders from the fabs. I read 14/16nm turnaround time is something like 3 months from start to finished silicon!

Intel seems desperate:

https://www.techpowerup.com/235092/i...ial-slide-deck

Edit!!

It seems they quoted Wccftech - WTF??

Second Edit:

Oh no:

http://pc.watch.impress.co.jp/img/pc...tml/1.jpg.html

Last edited by CAT-THE-FIFTH; 12-07-2017 at 03:20 PM.

When I first heard about this I reckoned Intel had gone the wrong way - they should've been claiming that Ryzen was just a chopped up server chip and not suitable for desktop computers!

Definite desperation from Intel - they know they don't have technical leadership in servers any more so they're going down the FUD route ... "you'll have to work harder if you transition to AMD", "you'll have to re-optimise software", "the ecosystem isn't in place", "they've not got a proven track record of delivering to the data centre" (that last one is bull, btw.). ... sad to see really, and a little surprising - I wouldn't've thought they were that sensitive to revenues in server...

It just seems such a terrible waste of resources, both hardware and fuel, to keep doing it this way. Aside from the difficulty of getting hold of them initially, if they insist on PoW, surely ASICs are far less damaging all around? Or better still, something which doesn't use more power than a smallish country and end up tracking with fuel prices based on the currency they're supposedly decoupling from in the first place!

Aside from the people that openly admit they're trying to make a quick buck from mining, there are those who say it's more of a hobby thing - have video games really gotten that boring?? Don't get me wrong, I'm into building as much as the next guy, but I draw the line at just pouring kWh after kWh down the drain even if I break even financially!

Anyway, even if CPUs turn out to be popular for 'mining', perhaps their higher volume would minimise the market impact, along with the fact you also need memory and motherboard to go with them which lowers their comparable value when you're putting a farm together.

LOL:

https://mobile.twitter.com/IanCutres...69342001508353

Originally Posted by Ian Cutress

Originally Posted by Kevin Krewill

Originally Posted by Ryan Shrout

If you're GPU mining you also need a CPU/motherboard/RAM, so I don't think it's that big a difference.

If the workloads are anything like folding, you could invest in a Ryzen 7 and a couple of GPUs, dedicate a couple of CPU threads to managing the GPU mining, and use the rest of the CPU resource to mine a different currency. That way you're diversifying your cryptocurrency portfolio and not wasting those idle CPU cycles.

It's not a huge market, but it's a clever ploy by AMD because it's one field where they have a performance, efficiency and cost advantage over Intel. And right now they need to hit any market they can possibly sell into, to get the revenues moving and increase market share...

What I mean is you need each of those for *one* CPU - with GPU mining, people are sticking 8+ GPUs per system which reduces the overheads per processor.

You're not really wasting anything as the cores will be power gated and using barely any power if they're left idle.

Off on a bit of a tangent, but something I've been saying for a while is it would be interesting to use waste heat to e.g. feed into central heating so it's not truly wasted. Given electricity prices are far higher than gas in the UK it's perhaps not terribly practical if it's not profitable anyway, but it's an interesting concept to get something done with the electricity on the way to it becoming heat, rather than simply running it through a heating element. I know some universities do something similar.

It's not the waste energy I was thinking of - it's the silicon sitting there unused and unloved. If you've got 16 threads in your machine and you're only running mining software on GPUs, you're going to have a lot of silicon that could be assigned to another task; so why not do some CPU mining as well?

It's already being done: http://www.bbc.co.uk/news/magazine-32816775

That's a 2015 article about a company selling people e-radiators that form a distributed computing network.

There's a number of issues that I don't think have been entirely resolved yet, the biggest one being scale; even if you stacked 8 RX 580s in a box, you'd still only be at about 1/10th the power of a small domestic boiler. Enough for a room heater, perhaps (like the radiator above), but not much more. And if you wanted to harvest that heat and take it somewhere else, you've got a lot of additional complexity and a reduction in efficiency. Heat really doesn't like being contained, after all...
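The scale point above can be checked with some back-of-envelope arithmetic. The sketch below assumes 185 W per RX 580 (AMD's rated board power for the reference card) and roughly 15 kW for a small domestic boiler; neither figure comes from the thread, and real cards and boilers vary.

```python
# Assumed figures, not from the thread:
RX580_BOARD_POWER_W = 185      # AMD's rated typical board power
SMALL_BOILER_OUTPUT_W = 15_000  # rough figure for a small domestic boiler


def rig_heat_output_w(gpu_count: int, watts_per_gpu: float) -> float:
    """Essentially all electrical power drawn ends up as heat."""
    return gpu_count * watts_per_gpu


rig_w = rig_heat_output_w(8, RX580_BOARD_POWER_W)
fraction_of_boiler = rig_w / SMALL_BOILER_OUTPUT_W

print(f"8-GPU rig: {rig_w:.0f} W of heat")
print(f"Fraction of boiler output: {fraction_of_boiler:.1%}")
```

Under those assumptions the box puts out about 1.5 kW, which is in the same range as a typical plug-in room heater and about a tenth of the boiler.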

EDIT:

Actually had another thought on GPU mining. If you're going to load up with Polaris based cards (which seems to be the popular choice), I'd be interested to know the kind of power consumption reductions you can get by tweaking the clock speeds down to ~1GHz, perhaps a little less. I bet you wouldn't lose much performance but the power consumption would drop dramatically...
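As a rough guide to why the drop could be dramatic: dynamic power in CMOS scales approximately with voltage squared times frequency, so a lower clock that also allows a lower voltage saves more than the clock reduction alone. The sketch below uses the RX 580's 1340 MHz reference boost clock; both voltages are hypothetical values picked for illustration, and leakage and memory power are ignored.

```python
def relative_dynamic_power(f_new_mhz: float, v_new: float,
                           f_old_mhz: float, v_old: float) -> float:
    """Ratio of new to old dynamic power using the P ~ V^2 * f approximation."""
    return (v_new / v_old) ** 2 * (f_new_mhz / f_old_mhz)


# 1340 MHz is the RX 580 reference boost clock.
# The voltages are hypothetical, for illustration only.
STOCK_MHZ, STOCK_V = 1340.0, 1.15
TUNED_MHZ, TUNED_V = 1000.0, 0.95

ratio = relative_dynamic_power(TUNED_MHZ, TUNED_V, STOCK_MHZ, STOCK_V)
print(f"Clock: {TUNED_MHZ / STOCK_MHZ:.0%} of stock")
print(f"Estimated core dynamic power: {ratio:.0%} of stock")
```

With these made-up voltages the core would run at about 75% of the stock clock for roughly half the dynamic power. Actual savings depend on how far a given card will undervolt, so treat this as an estimate rather than a measurement.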

Last edited by scaryjim; 13-07-2017 at 04:01 PM.

Purpose-built mining rigs tend to use the cheapest Celeron they can find - note how they're also out of stock in many places.

I do vaguely remember those heaters now, but yeah you'd need a lot of silicon to heat a whole house in winter. Maybe in places where gas isn't so cheap, it would make sense to recycle older hardware for this sort of use e.g. for folding or whatever.

I think that's fairly common practice - I don't really have more than a passing interest but I have read a few times about how cards can be undervolted and/or underclocked to improve overall efficiency, and therefore profitability.

If these Ryzen 3 prices are true, AMD is onto a winner:

http://wccftech.com/amd-ryzen-3-1300...th-july-month/

Edit!!

Interesting article:

https://www.nextplatform.com/2017/07...ies-scale-amd/

Last edited by CAT-THE-FIFTH; 14-07-2017 at 12:30 AM.

Someone on Reddit has received their water cooled RX Vega card.

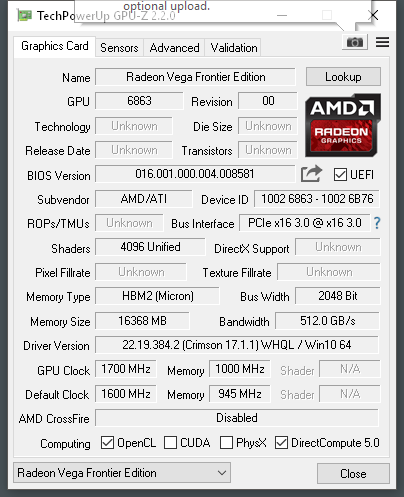

The person on Reddit who got the water cooled RX Vega card got it up to 1.7GHz!!

Is that an RX Vega, or a Vega Frontier Edition? I didn't think RX was due for another month...

I'd guess it's big because they use the same card for both watercooled and air-cooled variants...

EDIT: the GPU-Z says Frontier edition and 16GB of HBM2. So maybe the RX version will have a smaller PCB - although since they're meant to be making both air and water cooled RX Vega I suspect they'll use the same PCB and it will be quite long...

EDIT2: GPU-Z shot also says 512GB/s, which would mean they'd got the HBM2 up to 2Gbps, which is pretty good.
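The 512GB/s to 2Gbps step follows from bus width times per-pin data rate. A quick sketch, assuming Vega FE's two HBM2 stacks give a 2048-bit bus (1024 bits per stack; that figure is not stated in the thread) and that HBM2 transfers twice per memory clock:

```python
VEGA_FE_BUS_WIDTH_BITS = 2048  # assumption: 2 HBM2 stacks x 1024 bits each


def bandwidth_gb_s(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    return bus_width_bits * pin_rate_gbps / 8


def pin_rate_gbps(bus_width_bits: int, bandwidth: float) -> float:
    """Per-pin data rate (Gbps) needed to reach a given bandwidth in GB/s."""
    return bandwidth * 8 / bus_width_bits


def clock_to_pin_rate_gbps(mem_clock_mhz: float) -> float:
    """Double data rate: two transfers per memory clock cycle."""
    return mem_clock_mhz * 2 / 1000


print(pin_rate_gbps(VEGA_FE_BUS_WIDTH_BITS, 512.0))   # rate implied by 512 GB/s
print(bandwidth_gb_s(VEGA_FE_BUS_WIDTH_BITS,
                     clock_to_pin_rate_gbps(1000.0)))  # bandwidth at 1000 MHz
```

So a 512GB/s reading on a 2048-bit bus corresponds to a 1000MHz memory clock, i.e. 2Gbps per pin.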

Sorry I meant Vega FE.

They got the card up to 1.7GHz for the core and 1100MHz for the RAM. Compared to their high end custom GTX 1080 Ti with a huge cooler, performance seems to be between a GTX 1080 and a GTX 1080 Ti.

Edit!!

It seems that, if you look at the scores using a Ryzen 7 1800X, the Vega FE water cooled edition produces around the same score as the top 10 Fire Strike scores for the GTX 1080 on the 3DMark website.

Last edited by CAT-THE-FIFTH; 14-07-2017 at 04:56 PM.
